Part 2. Brisbane Airport

Dr Bill Johnston

Using paired and un-paired t-tests to compare long timeseries of data observed in parallel by instruments housed in the same or different Stevenson screens at one site, or in screens located at different sites, is problematic. Part of the problem is that both tests assume that the air being monitored is the control variable, that is, that air inside the screen is spatially and temporally homogeneous, which for a changeable, turbulent medium is not the case.

Irrespective of whether data are measured on the same day, paired t-tests require the same parcels of air to be monitored by both instruments 100% of the time. As instruments co-located in the same Stevenson screen are in different positions their data cannot be considered ‘paired’ in the sense required by the test. Likewise for instruments in separate screens, and especially if temperature at one site is compared with daily values measured some distance away at another.

As paired t-tests ascribe all variation to subjects (the instruments), and none to the response variable (the air), test outcomes are seriously biased compared to un-paired tests, where variation is ascribed more generally to both the subjects and the response.

The paired t-test compares the mean of the differences between subjects with zero, whereas the un-paired test compares subject means with each other. If the tests find a low probability (P) that the mean difference is zero, or that subject means are the same, typically less than 0.05 (P<0.05 = 5% or 1 in 20), it can be concluded that subjects differ in their response (i.e., the difference is significant). Should probability be less than 0.01 (P<0.01 = 1% or 1 in 100) the between-subject difference is highly significant. However, significance itself does not ensure that the size of difference is meaningful in the overall scheme of things.
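As a minimal sketch of the two statistics described above, the following pure-Python functions compute a paired t and an un-paired (pooled-variance) t for the same two series. The function names and the five made-up Tmax values are illustrative assumptions, not data from the study; the un-paired version assumes equal variances.

```python
import math
import statistics

def paired_t(control, other):
    """t for H0: the mean of the (other - control) differences is zero."""
    diffs = [o - c for c, o in zip(control, other)]
    se = statistics.stdev(diffs) / math.sqrt(len(diffs))
    return statistics.fmean(diffs) / se

def unpaired_t(control, other):
    """t for H0: the two means are equal (pooled variance assumed)."""
    n1, n2 = len(control), len(other)
    pooled_var = ((n1 - 1) * statistics.variance(control)
                  + (n2 - 1) * statistics.variance(other)) / (n1 + n2 - 2)
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (statistics.fmean(other) - statistics.fmean(control)) / se

# Hypothetical daily maxima (degrees C); site1 is treated as the control.
site1 = [24.0, 26.0, 28.0, 30.0, 32.0]
site2 = [24.2, 26.4, 28.3, 30.2, 32.4]

t_paired = paired_t(site1, site2)
t_unpaired = unpaired_t(site1, site2)
print(f"paired t = {t_paired:.2f}, un-paired t = {t_unpaired:.2f}")
```

The mean difference is the same in both calls; only the denominator changes. Because the paired test discards day-to-day variation in the air itself, its t-statistic is far larger than the un-paired one for these values.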

Assumptions

All statistical tests are based on underlying assumptions that ensure results are trustworthy and unbiased. The main assumption is that the differences (in the case of paired tests) or, for unpaired tests, the data sequenced within treatment groups are independent, meaning that data for one time are not serially correlated with data for other times. As timeseries embed seasonal cycles and in some cases trends, steps must be taken to identify and mitigate autocorrelation prior to undertaking either test.

A second assumption, which is less important for large datasets, is that data are normally distributed, following a bell-shaped envelope with most observations clustered around the mean and the remainder diminishing in number towards the tails.

Finally, a problem unique to large datasets is that the denominator in the t-test equation (the standard error) becomes diminishingly small as the number of daily samples increases. Consequently, for a given difference the t-statistic becomes increasingly large (growing roughly with the square root of the sample size), together with the likelihood of finding significant differences that are too small to be meaningful. In statistical parlance this is akin to Type 1 error (a false positive): the fallacy of declaring significance for differences that do not matter. Such differences could be due to single aberrations or outliers, for instance.
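The dependence on sample size can be made explicit with a short worked equation. The symbols are assumptions introduced here for illustration: n is the number of observations per group (taken as equal), s_p is the pooled standard deviation, and d is the standardised difference between the two means.

```latex
t \;=\; \frac{\bar{x}_2 - \bar{x}_1}{s_p \sqrt{\tfrac{1}{n} + \tfrac{1}{n}}}
  \;=\; \frac{\bar{x}_2 - \bar{x}_1}{s_p}\,\sqrt{\frac{n}{2}}
  \;=\; d\,\sqrt{\frac{n}{2}},
\qquad
d \;=\; \frac{\bar{x}_2 - \bar{x}_1}{s_p}
```

For a fixed difference and a fixed spread, d does not change, yet t keeps growing as n increases, so any non-zero difference eventually crosses a significance threshold.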

A protocol

Using a parallel dataset related to a site move at Townsville airport in December 1994, a protocol has been developed to help avoid pitfalls in applying t-tests to timeseries of parallel data. At the outset, an estimate of effect size, determined as the raw data difference divided by the standard deviation (Cohen's d), assesses whether the difference between instruments/sites is likely to be meaningful. An Excel workbook was provided with step-by-step instructions for calculating day-of-year (1-366) averages that define the annual cycle, constructing a look-up table, and deducting the respective values from the data, thereby producing de-seasoned anomalies. Anomalies are differenced as an additional variable (Site2 minus Site1, which is the control).
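The data-preparation steps can be sketched in Python as an alternative to the workbook. This is an illustration under stated assumptions, not the workbook's method: the function names are hypothetical, Cohen's d is computed with a pooled standard deviation, the look-up table is built from the control site (Site1) and applied to both series, and the two years of data are synthetic.

```python
import math
import statistics
from collections import defaultdict
from datetime import date, timedelta

def day_of_year_lookup(dates, values):
    """Average value for each day of year (1-366): the annual cycle."""
    buckets = defaultdict(list)
    for d, v in zip(dates, values):
        buckets[d.timetuple().tm_yday].append(v)
    return {doy: statistics.fmean(vs) for doy, vs in buckets.items()}

def anomalies(dates, values, lookup):
    """Deduct the day-of-year average from each daily value."""
    return [v - lookup[d.timetuple().tm_yday] for d, v in zip(dates, values)]

def cohens_d(control, other):
    """Difference in means divided by the pooled standard deviation (assumed)."""
    n1, n2 = len(control), len(other)
    pooled_var = ((n1 - 1) * statistics.variance(control)
                  + (n2 - 1) * statistics.variance(other)) / (n1 + n2 - 2)
    return (statistics.fmean(other) - statistics.fmean(control)) / math.sqrt(pooled_var)

# Synthetic example: two non-leap years with an annual cycle, a cool year
# followed by a warm year, and Site2 offset from Site1 by 0.25 degrees C.
dates = [date(2001, 1, 1) + timedelta(days=i) for i in range(730)]
site1 = [25 + 5 * math.sin(2 * math.pi * d.timetuple().tm_yday / 365)
         + (1.0 if d.year == 2002 else -1.0) for d in dates]
site2 = [v + 0.25 for v in site1]

lookup = day_of_year_lookup(dates, site1)        # cycle from the control site
anom1 = anomalies(dates, site1, lookup)
anom2 = anomalies(dates, site2, lookup)
anom_diff = [b - a for a, b in zip(anom1, anom2)]  # Site2 minus Site1

d_raw = cohens_d(site1, site2)
print(f"Cohen's d (raw) = {d_raw:.3f}, "
      f"mean anomaly difference = {statistics.fmean(anom_diff):.2f}")
```

Using one look-up table for both series preserves the between-site offset in the anomaly differences; de-seasoning each site with its own cycle would remove it.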

Having prepared the data, graphical analysis of their properties, including autocorrelation function (ACF) plots, daily data distributions, probability density function (PDF) plots, and inspection of anomaly differences, assists in determining which data to compare (raw data or anomaly data). The dataset that most closely matches the underlying assumptions of independence and normality should be chosen. Where autocorrelation is unavoidable, randomised data subsets offer a way forward. (Randomisation may be done in Excel and subsets of increasing size used in the analysis.)
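A rough Python sketch of the autocorrelation check and the randomised-subset step follows. The function names, the seed values and the synthetic autocorrelated series are assumptions for illustration; the source performs these steps in Excel and PAST.

```python
import random
import statistics

def acf(series, max_lag):
    """Sample autocorrelation for lags 0..max_lag."""
    n = len(series)
    mean = statistics.fmean(series)
    dev = [x - mean for x in series]
    denom = sum(e * e for e in dev)
    return [sum(dev[t] * dev[t + k] for t in range(n - k)) / denom
            for k in range(max_lag + 1)]

def random_paired_subset(a, b, size, seed=0):
    """Draw the same randomly chosen days from both series, in random order."""
    rng = random.Random(seed)
    idx = rng.sample(range(len(a)), size)
    return [a[i] for i in idx], [b[i] for i in idx]

# Synthetic, strongly autocorrelated series standing in for daily anomalies.
rng = random.Random(42)
series1, x = [], 0.0
for _ in range(2000):
    x = 0.8 * x + rng.gauss(0, 1)
    series1.append(x)
series2 = [v + 0.25 + rng.gauss(0, 0.5) for v in series1]

sub1, sub2 = random_paired_subset(series1, series2, 500)
lag1_full = acf(series1, 1)[1]
lag1_sub = acf(sub1, 1)[1]
print(f"lag-1 ACF: full series = {lag1_full:.2f}, random subset = {lag1_sub:.2f}")
```

Sampling days at random breaks the time ordering, so the lag-1 autocorrelation of the subset should fall close to zero while each Site1 value stays matched with its Site2 counterpart. Repeating the draw with increasing `size` gives the subsets of increasing size mentioned above.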

Most analyses can be undertaken using the freely available statistical application PAST from the University of Oslo: https://www.nhm.uio.no/english/research/resources/past/ Specific stages of the analysis have been referenced to pages in the PAST manual.

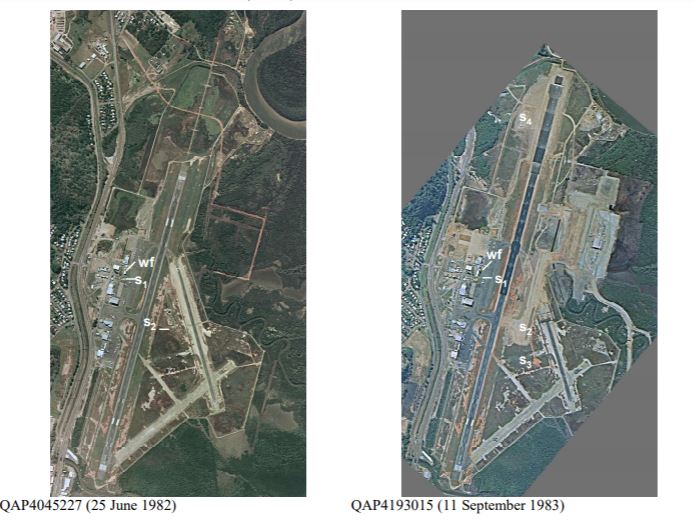

The Brisbane Study

The Brisbane study replicates the previous Townsville study, with the aim of showing that protocols are robust. While the Townsville study compared thermometer and automatic weather station (AWS) maxima measured in 60-litre screens located 172 m apart, the Brisbane study compared Tmax for two AWS, each with 60-litre screens, 3.2 km apart, increasing the likelihood that site-related differences would be significant.

While the effect size for Brisbane was triflingly small (Cohen's d = 0.07), and the difference between data-pairs stabilised at about 940 sub-samples, a significant difference between sites of 0.25 °C was found when the number of random sample-pairs exceeded about 1,600. Illustrating the statistical fallacy of excessive sample numbers, differences became significant because the denominator in the test equation (the pooled standard error) declined as sample size increased, not because the difference widened. PDF plots suggested it was not until the effect size exceeded 0.2 that simulated distributions showed a clear separation, such that the difference between Series1 and Series2 of 0.62 °C could be regarded as both significant and meaningful in the overall scheme of things.
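The sample size at which a given effect size becomes significant can be approximated with a few lines of Python. This is a back-of-envelope sketch rather than the study's calculation: it assumes an un-paired test with equal group sizes, a two-sided 5% threshold, and the normal approximation to the t-distribution (reasonable for samples this large). The function name is hypothetical.

```python
import math
from statistics import NormalDist

def min_n_per_group(d, alpha=0.05):
    """Smallest n per group at which t = d * sqrt(n / 2) reaches the
    two-sided critical value (normal approximation)."""
    z = NormalDist().inv_cdf(1 - alpha / 2)
    return math.ceil(2 * (z / d) ** 2)

for d in (0.07, 0.2):
    print(f"d = {d}: significant once n per group reaches about {min_n_per_group(d)}")
```

For d = 0.07 this gives 1568, in line with the roughly 1,600 sample-pairs at which the Brisbane difference became significant. The threshold depends only on d and n, not on whether the difference is large enough to matter.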

Importantly, the trade-off between significance and effect size is central to avoiding the trap of drawing conclusions based on statistical tests alone.

Dr Bill Johnston

4 June 2023

Two important links – find out more

First Link: The page you have just read is the basic cover story for the full paper. If you are stimulated to find out more, please link through to the full paper – a scientific Report in downloadable pdf format. This Report contains far more detail including photographs, diagrams, graphs and data and will make compelling reading for those truly interested in the issue.

Click here to access a full pdf report containing detailed analysis and graphs

Second Link: This link will take you to a downloadable Excel spreadsheet containing the large body of data used in researching this paper. The data support the Full Report.

Click here to download a full Excel data pack containing the data used in this research